SAIVV: AI Security Advisor at Your Service

The AI software market is estimated at $126 billion[1], with applications ranging from chatbots that provide customer recommendations to AI-powered claims processing and image-based auto-insurance estimation. However, companies and government organisations alike are finding out the hard way that AI models are only as good as the data and processes used to train them: their predictions have been found to be not only incorrect but also to contain discriminatory biases.

To set the scene, the Department for Work and Pensions (DWP)'s AI-powered benefit fraud detection system wrongly flagged over 200,000 people for possible benefit fraud, leading to around £4.4 million being wasted on unnecessary investigations[2]. Similarly, Air Canada was taken to a tribunal by a customer who had received incorrect advice from its chatbot about claiming a bereavement fare[3]. Finally, REACH VET, an AI-powered system designed to prevent suicide among veterans, was found to prioritise male veterans over female veterans when allocating much-needed support[4].

[1] https://www.statista.com/statistics/607716/worldwide-artificial-intelligence-market-revenues/

[2] https://www.theguardian.com/society/article/2024/jun/23/dwp-algorithm-wrongly-flags-200000-people-possible-fraud-error

[3] https://www.theregister.com/2024/02/15/air_canada_chatbot_fine/

[4] https://www.military.com/daily-news/2024/05/23/vas-veteran-suicide-prevention-algorithm-favors-men.html

Regulators are responding. The European Union, for example, has developed the EU AI Act to encourage ethical and responsible AI adoption. Expected to come into force later this year, the Act requires, among other things, that AI models undergo periodic evaluation through adversarial testing and that AI engineers document the results. With most AI applications developed in-house, how can companies test their AI models for vulnerabilities and biases to ensure compliance? This is where our SAIVV platform comes into play.

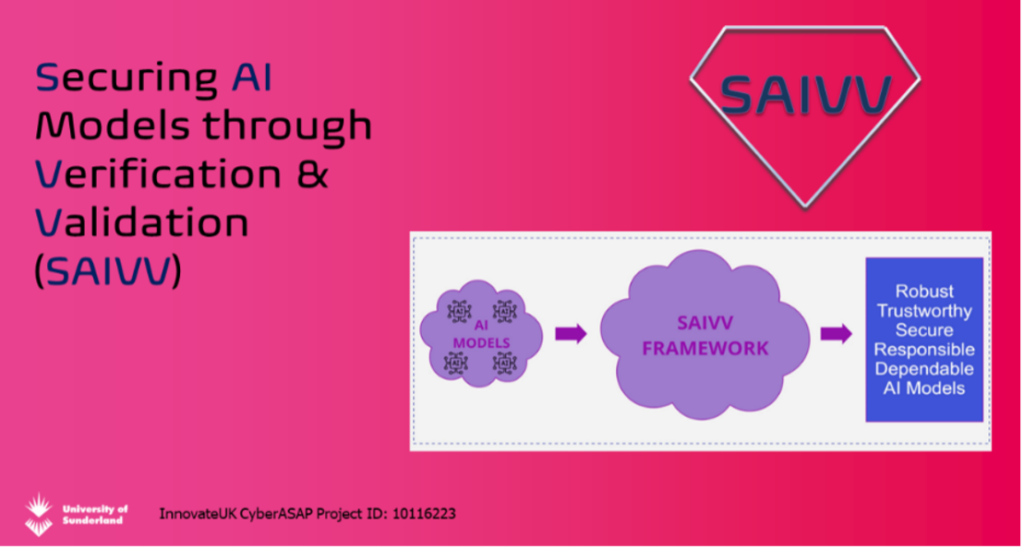

SAIVV is a ground-breaking web-based platform that allows organisations to thoroughly assess and fortify their AI applications before they are released to the outside world. Funded by the UKRI CyberASAP startup accelerator programme, it combines Explainable AI (XAI) with AI model validation techniques to empower organisations to:

- Evaluate the security and privacy of their AI applications;

- Scan their AI applications for vulnerabilities and prediction biases;

- Monitor their training data for any poisoned samples.

We would like to speak with you to understand your organisation's AI security needs and how we can tailor SAIVV to meet them. More specifically, we'd love to have a 15-minute chat over the next couple of weeks to hear your thoughts on:

- How AI is used in your business operations;

- How you approach AI security and ethics;

- How you think SAIVV can support your business operations.

In return for your participation, we will provide three months of free organisational cyber and AI consultancy, working with you to improve your organisation's cyber security and AI adoption strategies. All consultancy will be carried out under a strict Non-Disclosure Agreement, put in place at the start of the engagement.

If you are interested in talking to us, please contact Dr. Thomas Win (thomas.thu_yein@sunderland.ac.uk) to get started.

Let's work towards a future where trustworthy and responsible AI is the norm rather than the exception!

Written by:

Dr. Thomas Win

Associate Head of School of Computer Science

Faculty of Technology