In this story we will describe how you can create complex chain workflows using LangChain (v. 0.0.190) with ChatGPT under the hood. This story is a follow-up to a previous story on Medium and builds on the ideas from that story.

LangChain has a set of foundational chains:

- LLM: a simple chain with a prompt template that can process multiple inputs.

- RouterChain: a gateway that uses the large language model (LLM) to select the most suitable processing chain.

- Sequential: a family of chains that process input sequentially. The output of the first node in the chain becomes the input of the second node, the output of the second node becomes the input of the third, and so on.

- Transformation: a type of chain that allows Python function calls for customizable text manipulation.
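The sequential idea can be pictured without LangChain at all. In the minimal sketch below, which is only an analogy and not LangChain's API, every chain is a function from one string to another. `fake_llm` is a hypothetical stand-in for the model, `make_llm_step` plays the role of an LLM chain (prompt template plus model call), `shout` is a transformation, and `simple_sequential` (also hypothetical) feeds each step's output into the next step, as described for sequential chains above.

```python
from typing import Callable, List

Step = Callable[[str], str]  # in this sketch every chain maps one string to another


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a model call: it just echoes the prompt it receives.
    return f"<answer to: {prompt}>"


def make_llm_step(template: str) -> Step:
    # An "LLM chain" in this sketch: fill the prompt template, then call the model.
    return lambda text: fake_llm(template.format(input=text))


def shout(text: str) -> str:
    # A "transformation" step: an ordinary Python function over the text.
    return text.upper()


def simple_sequential(steps: List[Step]) -> Step:
    # A "simple sequential" chain: the output of step i becomes the input of step i + 1.
    def run(text: str) -> str:
        for step in steps:
            text = step(text)
        return text
    return run


pipeline = simple_sequential([shout, make_llm_step("Write a unit test for: {input}")])
print(pipeline("def add(a, b): return a + b"))
# prints: <answer to: Write a unit test for: DEF ADD(A, B): RETURN A + B>
```

Because each step has exactly one input and one output, steps can be chained in any order without naming their inputs and outputs, which is the same restriction SimpleSequentialChain places on its sub-chains.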

A Complex Workflow

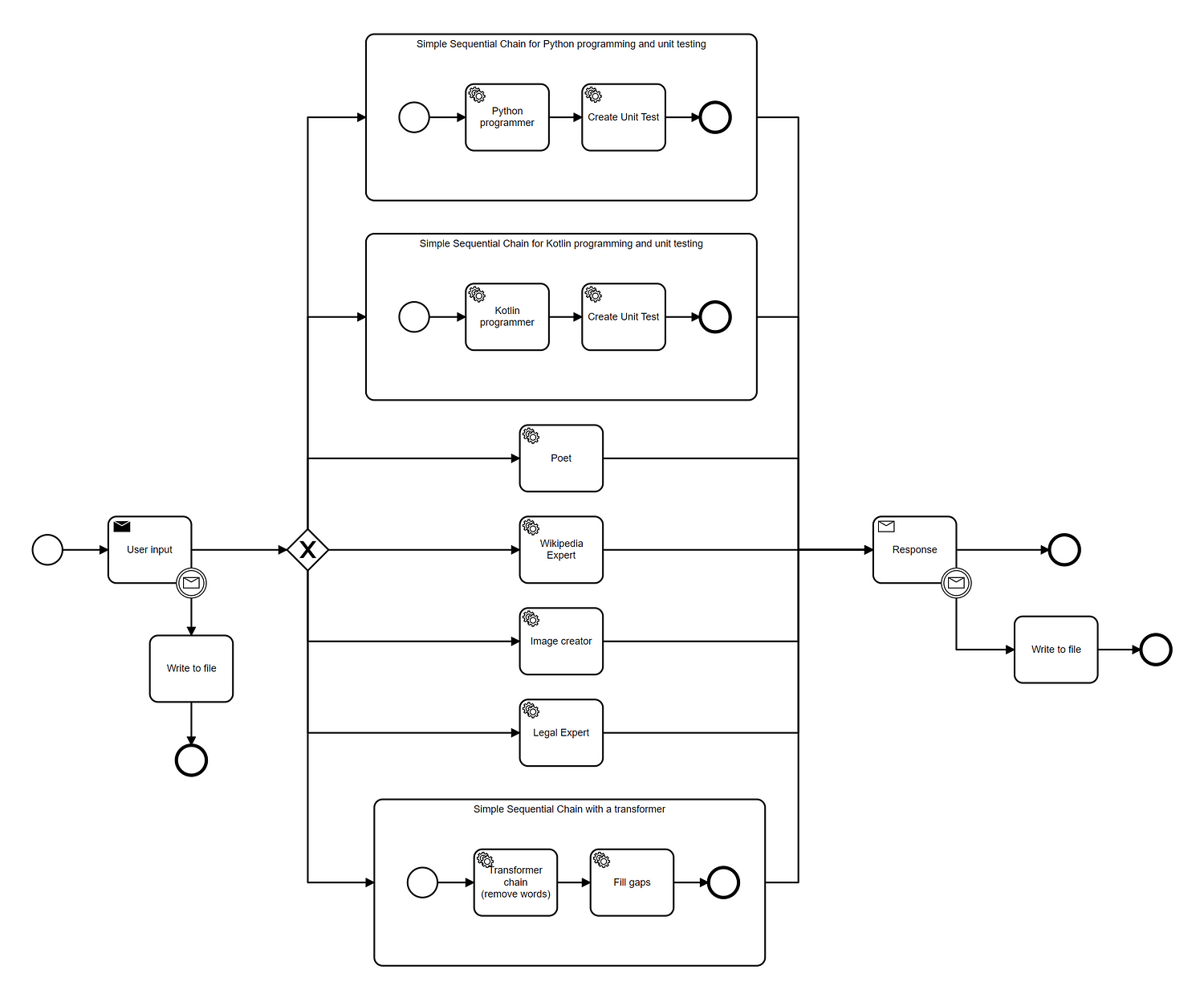

In this story we are going to use all four foundational chains to build the workflow shown above as a simple command-line application.

This flow performs the following steps:

- Receive the user input.

- The input is written to a file via a callback.

- The router selects the most appropriate chain from seven options:
  - Python programmer (provides a solution and a unit test using a sequential chain)
  - Kotlin programmer (provides a solution and a unit test using a sequential chain)
  - Poet (simple LLMChain that provides a single response)
  - Wikipedia Expert (simple LLMChain)
  - Graphical artist (simple LLMChain)
  - UK and US Legal Expert (simple LLMChain)
  - Word gap filler (contains a sequential chain that transforms the input and fills the gaps)

- The large language model (LLM) responds.

- The output is again written to a file via a callback.

As you can see, the main router chain triggers not only simple LLMChains but also SimpleSequentialChains.
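The routing step can be pictured with another plain-Python sketch. It assumes the router model answers with a JSON object holding a `destination` name and a `next_inputs` string, which is roughly the shape LangChain's multi-prompt router prompt asks the model for, with `DEFAULT` meaning "no specific destination". The functions `handle`, `fake_router`, `poet` and `python_programmer` are hypothetical, and the list `log` merely stands in for the file callback. Single-step and two-step destinations sit in the same dictionary because both are plain callables here.

```python
import json
from typing import Callable, Dict, List

Chain = Callable[[str], str]  # every destination is "text in, text out" in this sketch


def poet(text: str) -> str:
    # Hypothetical single-step destination (like a plain LLMChain).
    return f"poem about {text}"


def python_programmer(text: str) -> str:
    # Hypothetical two-step destination (like a SimpleSequentialChain):
    # the second step consumes the output of the first.
    code = f"code for {text}"
    return f"unit test for [{code}]"


def fake_router(question: str) -> str:
    # Hypothetical keyword router; the real workflow asks the LLM to choose.
    name = "python programmer" if "Python" in question else "DEFAULT"
    return json.dumps({"destination": name, "next_inputs": question})


def handle(question: str, router: Callable[[str], str],
           destinations: Dict[str, Chain], default: Chain, log: List[str]) -> str:
    log.append(f"Q: {question}")              # record the input (the callback's job)
    decision = json.loads(router(question))   # the router picks a destination
    # "DEFAULT" and unknown names both fall back to the default chain in this sketch
    chain = destinations.get(decision["destination"], default)
    answer = chain(decision["next_inputs"])   # the chosen chain responds
    log.append(f"A: {answer}")                # record the output
    return answer


destinations = {"poet": poet, "python programmer": python_programmer}
transcript: List[str] = []
print(handle("Can you write a Python function?", fake_router, destinations,
             default=lambda t: f"chat: {t}", log=transcript))
# prints: unit test for [code for Can you write a Python function?]
```

Note that LangChain's own router raises an error for an unknown destination name unless configured otherwise; the silent fallback here is a simplification.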

Flow Implementation

We have published a Python implementation of the flow described above in this GitHub repository:

GitHub - gilfernandes/complex_chain_playground: Playground project acting as an example for a complex LangChain workflow

If you want to experiment with it, you can clone the repository and then install the required libraries using Conda with Mamba.

Here is the script we have used to install the necessary libraries:

```bash
conda create --name langchain2 python=3.10
conda activate langchain2
conda install -c conda-forge mamba
mamba install openai
mamba install langchain
mamba install prompt_toolkit
```

You will need an OpenAI API key (the "ChatGPT key") set in your environment for the script to work.

On Linux, you can run a command like this one to set up the OpenAI API key:

```bash
export OPENAI_API_KEY=<key>
```

You can then activate the Conda environment and run the script:

```bash
conda activate langchain2
python ./lang_chain_router_chain.py
```

Example Output

We have executed the script with some questions and captured a transcript in this file.

Here are some prompts we used as input, together with the chains that were triggered:

| Prompt | Triggered chain | Result |
|---|---|---|
| Can you fill in the words for me in this text? Reinforcement learning (RL) is an area of machine learning concerned with how intelligent agents ought to take actions in an environment in order to maximize the notion of cumulative reward. Reinforcement learning is one of three basic machine learning paradigms, alongside supervised learning and unsupervised learning. | word filler | Removes every third word and then fills the gaps |
| What are the main differences between the UK and US legal systems in terms of the inheritance tax? | legal expert | Generates an explanation with a comparison of the laws |
| Can you write a Python function which returns the list of days between two dates? | python programmer | Generates the code and then the unit test |
| Can you write a Python function which implements the Levenshtein distance between two words? | python programmer | Generates the code and then the unit test |
| Can you write a Kotlin function which converts two dates in ISO Format (like e.g. '2023-01-01') to LocalDate and then calculates the number of days between both? | kotlin programmer | Generates the code and then the unit test |
| Can you please write a poem about the joys of software development in the English country side? | poet | Generates a poem: "In the realm of code, where logic doth reside / Amidst the verdant fields, software doth abide. / Where bytes and bits dance with the gentle breeze, / In the English countryside, a programmer finds ease." |
| Can you generate an image with the output of a sigmoid function and its derivative? | graphical artist | Generates an SVG image (not very accurate) |
| Can you explain to me the concept of a QBit in Quantum Computing? | wikipedia expert | Generates a decent explanation of the topic |
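The word filler's preprocessing step is a TransformChain built with `create_transform_func(3)`, whose body is not shown in this story. A minimal hypothetical version could look like the sketch below. It assumes the removed words are replaced by a `____` marker, and it follows the dictionary-in, dictionary-out shape of a TransformChain transform function, reading and writing the key `input` to match the `input_variables` and `output_variables` passed to the TransformChain in complex_chain.py.

```python
from typing import Callable, Dict

GAP = "____"  # assumed gap marker; the project's actual marker may differ


def create_transform_func(n: int) -> Callable[[Dict[str, str]], Dict[str, str]]:
    """Hypothetical: return a transform that blanks out every n-th word."""
    def transform(inputs: Dict[str, str]) -> Dict[str, str]:
        # Note: split() also collapses line breaks and repeated spaces.
        words = inputs["input"].split()
        gapped = [GAP if (i + 1) % n == 0 else w for i, w in enumerate(words)]
        return {"input": " ".join(gapped)}
    return transform


blank_every_third = create_transform_func(3)
print(blank_every_third({"input": "Reinforcement learning is an area of machine learning"}))
# prints: {'input': 'Reinforcement learning ____ an area ____ machine learning'}
```

The gapped text is then handed to the LLMChain as its only input, which is why the word filler needs a sequential chain rather than a single LLMChain.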

Implementation Details

The project contains a main script, complex_chain.py, that sets up the chains and executes them. It also contains other files, like FileCallbackHandler.py, which implements a callback handler that writes the model input and output to an HTML file.
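FileCallbackHandler.py is not reproduced in this story. As a rough, hypothetical illustration of the idea, the class below only collects inputs and outputs in memory and renders them as an HTML page. It deliberately does not subclass LangChain's callback base class; a real handler would typically subclass `BaseCallbackHandler` and override hooks such as `on_llm_start` and `on_llm_end`. The class name and the methods `on_input` and `on_output` are assumptions; only `create_html()` mirrors a name used in complex_chain.py.

```python
import html
from pathlib import Path
from typing import List, Optional, Tuple


class FileCallbackSketch:
    """Hypothetical stand-in for FileCallbackHandler: records I/O, renders HTML."""

    def __init__(self) -> None:
        self.entries: List[Tuple[str, str]] = []  # (kind, text) pairs in call order

    def on_input(self, text: str) -> None:
        self.entries.append(("input", text))

    def on_output(self, text: str) -> None:
        self.entries.append(("output", text))

    def create_html(self, path: Optional[Path] = None) -> str:
        # Escape model text so code answers (e.g. "a < b") render literally.
        rows = "\n".join(
            f'<div class="{kind}"><b>{kind}:</b><pre>{html.escape(text)}</pre></div>'
            for kind, text in self.entries
        )
        page = f"<html><body>\n{rows}\n</body></html>"
        if path is not None:
            path.write_text(page, encoding="utf-8")  # optionally persist the page
        return page


handler = FileCallbackSketch()
handler.on_input("Is 1 < 2?")
handler.on_output("Yes")
print(handler.create_html())
```

Keeping the entries in memory and rendering only on demand matches the way the main loop below writes the HTML file when the user types 's'.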

We are going to focus here on complex_chain.py.

complex_chain.py sets up the model first:

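```python
from langchain.chat_models import ChatOpenAI


class Config():
    model = 'gpt-3.5-turbo-0613'
    llm = ChatOpenAI(model=model, temperature=0)


cfg = Config()  # assumed: the functions below access the model through cfg.llm
```

It then declares a special variant of langchain.chains.router.MultiPromptChain, because we could not use the original class together with langchain.chains.SimpleSequentialChain. The variant's destination_chains field accepts both LLMChain and SimpleSequentialChain instances: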

```python
from typing import List, Mapping, Union

from langchain.chains import LLMChain, SimpleSequentialChain
from langchain.chains.router.base import MultiRouteChain, RouterChain


class MyMultiPromptChain(MultiRouteChain):
    """A multi-route chain that uses an LLM router chain to choose amongst prompts."""

    router_chain: RouterChain
    """Chain for deciding a destination chain and the input to it."""

    destination_chains: Mapping[str, Union[LLMChain, SimpleSequentialChain]]
    """Map of name to candidate chains that inputs can be routed to."""

    default_chain: LLMChain
    """Default chain to use when router doesn't map input to one of the destinations."""

    @property
    def output_keys(self) -> List[str]:
        return ["text"]
```

It then generates all chains (including a default chain) and stores them in a dictionary keyed by chain name:

```python
from langchain.chains import (
    ConversationChain,
    LLMChain,
    SimpleSequentialChain,
    TransformChain,
)
from langchain.prompts import PromptTemplate

# PromptFactory, create_transform_func, cfg and file_ballback_handler
# are defined elsewhere in the project.


def generate_destination_chains():
    """
    Creates a dictionary of LLM chains with different prompt templates.
    Note that some of the chains are sequential chains which are supposed to generate unit tests.
    """
    prompt_factory = PromptFactory()
    destination_chains = {}
    for p_info in prompt_factory.prompt_infos:
        name = p_info['name']
        prompt_template = p_info['prompt_template']
        chain = LLMChain(
            llm=cfg.llm,
            prompt=PromptTemplate(template=prompt_template, input_variables=['input']),
            output_key='text',
            callbacks=[file_ballback_handler]
        )
        if name not in prompt_factory.programmer_test_dict and name != prompt_factory.word_filler_name:
            # Plain LLMChain: one prompt, one response
            destination_chains[name] = chain
        elif name == prompt_factory.word_filler_name:
            # Remove every third word first, then let the LLM fill the gaps
            transform_chain = TransformChain(
                input_variables=["input"], output_variables=["input"],
                transform=create_transform_func(3), callbacks=[file_ballback_handler]
            )
            destination_chains[name] = SimpleSequentialChain(
                chains=[transform_chain, chain], verbose=True, output_key='text',
                callbacks=[file_ballback_handler]
            )
        else:
            # Normal chain is used to generate code
            # Additional chain to generate unit tests
            template = prompt_factory.programmer_test_dict[name]
            test_prompt = PromptTemplate(input_variables=["input"], template=template)
            test_chain = LLMChain(llm=cfg.llm, prompt=test_prompt, output_key='text',
                                 callbacks=[file_ballback_handler])
            destination_chains[name] = SimpleSequentialChain(
                chains=[chain, test_chain], verbose=True, output_key='text',
                callbacks=[file_ballback_handler]
            )
    # Used when the router does not pick any of the destinations
    default_chain = ConversationChain(llm=cfg.llm, output_key="text")
    return prompt_factory.prompt_infos, destination_chains, default_chain
```

It then sets up the router chain:

```python
from langchain.chains.router.llm_router import LLMRouterChain, RouterOutputParser
from langchain.chains.router.multi_prompt_prompt import MULTI_PROMPT_ROUTER_TEMPLATE
from langchain.prompts import PromptTemplate


def generate_router_chain(prompt_infos, destination_chains, default_chain):
    """
    Generates the router chain from the prompt infos.
    :param prompt_infos: The prompt information generated above.
    :param destination_chains: The LLM chains with different prompt templates
    :param default_chain: A default chain
    """
    # One "name: description" line per destination, inserted into the router prompt
    destinations = [f"{p['name']}: {p['description']}" for p in prompt_infos]
    destinations_str = '\n'.join(destinations)
    router_template = MULTI_PROMPT_ROUTER_TEMPLATE.format(destinations=destinations_str)
    router_prompt = PromptTemplate(
        template=router_template,
        input_variables=['input'],
        output_parser=RouterOutputParser()
    )
    router_chain = LLMRouterChain.from_llm(cfg.llm, router_prompt)
    multi_route_chain = MyMultiPromptChain(
        router_chain=router_chain,
        destination_chains=destination_chains,
        default_chain=default_chain,
        verbose=True,
        callbacks=[file_ballback_handler]
    )
    return multi_route_chain
```

Finally, it contains a main block that lets the user interact with the chains:

```python
from prompt_toolkit import HTML, prompt

if __name__ == "__main__":
    # Put here your API key or define it in your environment
    # os.environ["OPENAI_API_KEY"] = '<key>'
    prompt_infos, destination_chains, default_chain = generate_destination_chains()
    chain = generate_router_chain(prompt_infos, destination_chains, default_chain)
    with open('conversation.log', 'w') as f:
        while True:
            question = prompt(
                HTML("<b>Type <u>Your question</u></b> ('q' to exit, 's' to save to html file): ")
            )
            if question == 'q':
                break
            if question in ['s', 'w']:
                # Write the collected model inputs and outputs to an HTML file
                file_ballback_handler.create_html()
                continue
            result = chain.run(question)
            # Append the question and answer to the plain-text transcript
            f.write(f"Q: {question}\n\n")
            f.write(f"A: {result}")
            f.write('\n\n ====================================================================== \n\n')
            print(result)
            print()
```

Final Thoughts

LangChain allows the creation of really complex interaction flows with LLMs.

However, setting up the workflow was a bit more complicated than we had imagined, because langchain.chains.router.MultiPromptChain does not seem to work well with langchain.chains.SimpleSequentialChain. So we needed to create a custom class (MyMultiPromptChain) to build the complex flow.