In this story, we explore how you can convert old REST interfaces into a chatbot using plain ChatGPT function calling. There are lots of data-centric REST APIs out there, and many of them are used by websites to list or search all types of datasets. The main idea is to create chat-based experiences on top of existing REST interfaces. So we are going to add a conversational layer powered by ChatGPT on top of an existing REST interface that searches an event database.

We also explore how you can create your chatbot UI from scratch together with a Python-based server, without using any pre-built open source library for the UI. The server is written in Python and the web-based user interface is written in TypeScript and React.

Event Chat — A REST Conversion Project

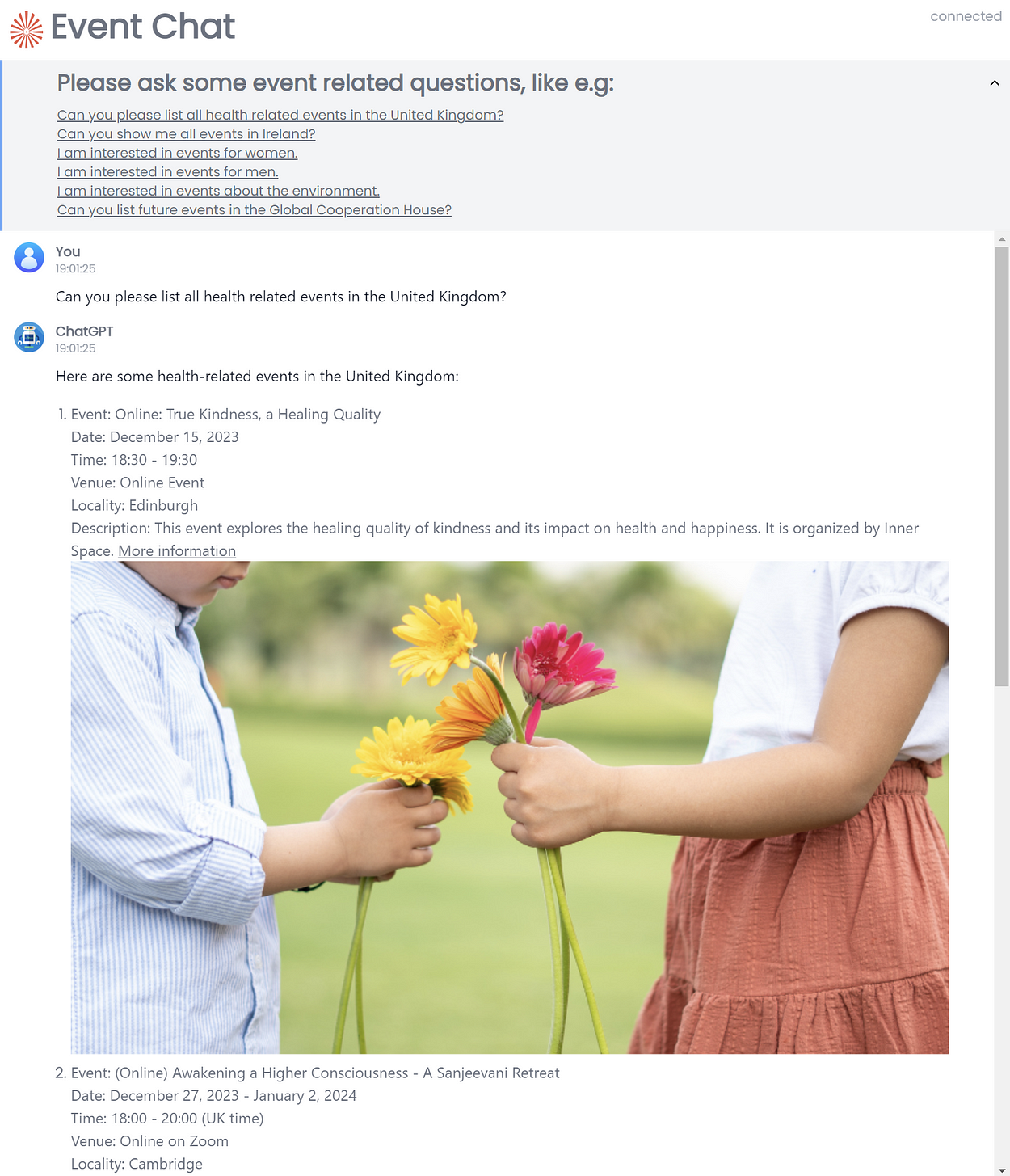

In order to understand how you can convert a REST interface into a chatbot, we have built a small event chat application. This application receives a user question about upcoming events and answers the questions with a list of details about the events including images and links.

Event Chat screenshot

This application supports streaming and replies with external links and images that are stored in a database.

Application Architecture

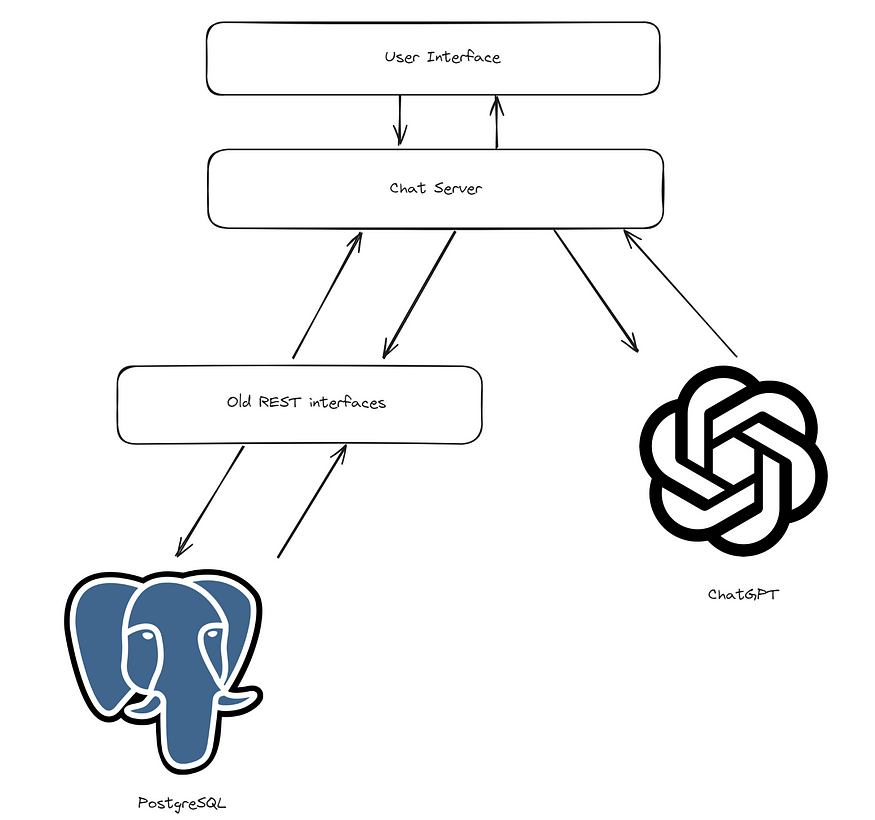

Here is a diagram representing the rough architecture of the application we have built:

High Level components

We have the following components in this application:

A user interface written in TypeScript using the React framework. The user interface uses websockets to communicate with the chat server. The input is just a question about some events.

A chat server written in Python containing an HTTP server that also uses websockets to communicate with the client. The chat server orchestrates the interaction with ChatGPT and the old-school REST interfaces to answer the user's questions.

ChatGPT which analyses the user question and routes it to the correct function and then receives the results from the REST interface to produce the final output.

REST interfaces that search for events and enrich the retrieved events with some extra data.

A PostgreSQL database which provides the data for the REST interfaces. The search itself runs against a Lucene index, but the data in that index comes from the PostgreSQL database.

Application Workflow

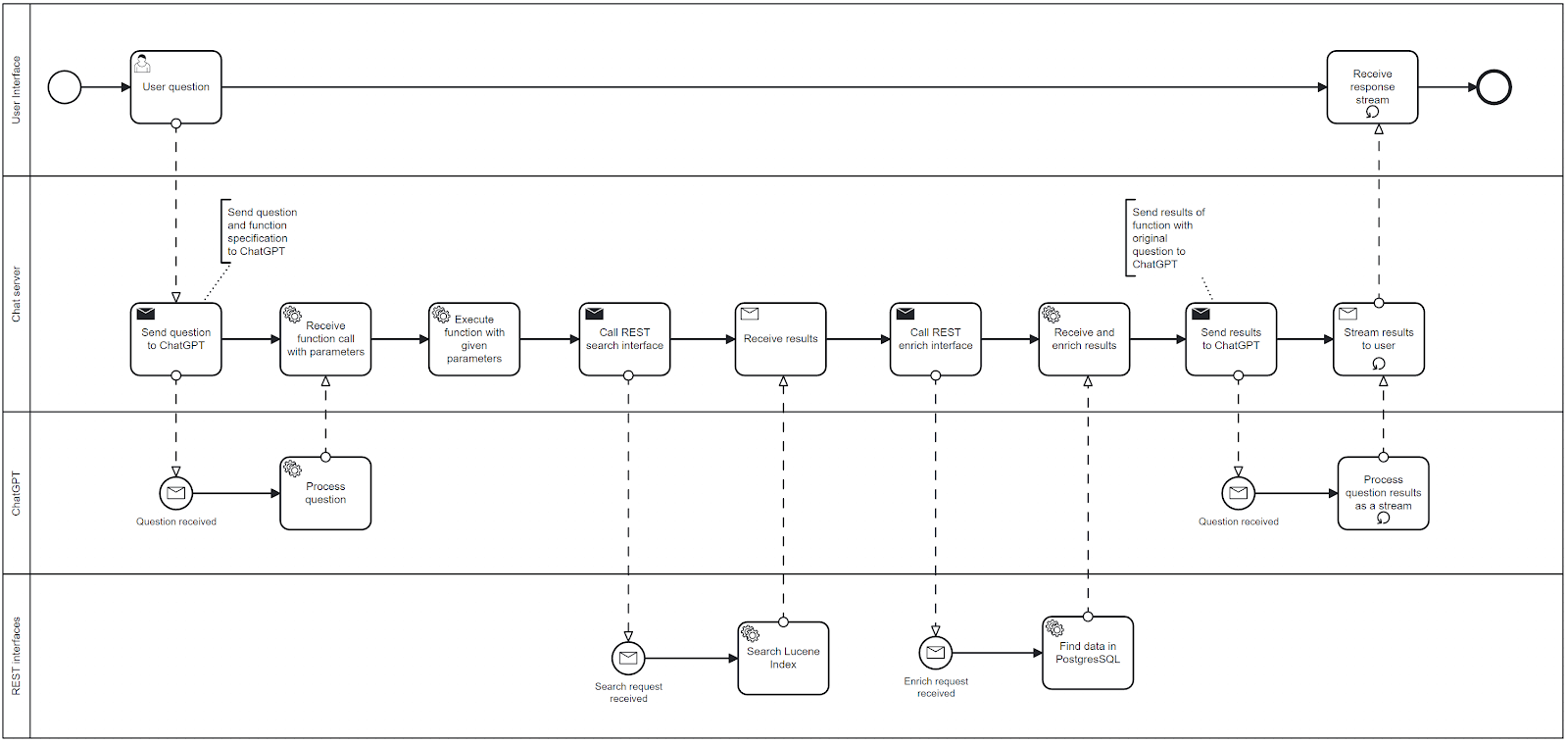

This is the workflow which processes a single chat request:

Single event request workflow

Please note that we have omitted error boundaries in the diagram.

The workflow has 4 participants (pools):

The user who only asks a question and receives a response to a question.

The chat server that orchestrates most of the actions between all other participants.

ChatGPT which receives the initial questions, figures out the function calls and receives the results of function calls to format these into natural language.

REST Interfaces — there are only two of them: one is a simple search interface and then there is a second which enriches the initial response with URLs of web pages and images.

Chat Server Workflow in Detail

The main actions happen in the chat server layer. Here are the details about the workflow in this pool (happy path only):

Initially it receives the question from the client and that should typically be a question about events.

The question is sent with a function call request to ChatGPT 3.5 (gpt-3.5-turbo-16k-0613)

If no error occurs, ChatGPT returns the function name together with the parameters it extracted from the user’s question.

The function specified by ChatGPT is called (at this stage, this is only an event search REST interface).

The results are returned from the REST interface in JSON format.

A second call to the REST interfaces then enriches the initial search results with URLs and images.

ChatGPT is then called a second time with a reference to the previously called function and the enriched data.

After processing the JSON data, ChatGPT replies in natural language. The results are streamed to the client: as soon as ChatGPT generates a token, it is sent to the client via websockets.
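The enrichment step in the workflow above can be pictured as a simple merge by event id. This is only a minimal sketch: the field names (`id`, `url`, `image`) and the `enrich_events` helper are assumptions made for illustration, not the payloads or code of the actual REST interfaces.

```python
from typing import Dict, List


def enrich_events(events: List[dict], extras_by_id: Dict[int, dict]) -> List[dict]:
    """Attach a web page URL and an image URL to each search hit (hypothetical fields)."""
    enriched = []
    for event in events:
        # Events without extra data keep None for both fields.
        extra = extras_by_id.get(event["id"], {})
        enriched.append({**event, "url": extra.get("url"), "image": extra.get("image")})
    return enriched


# Made-up sample data standing in for the two REST responses.
search_hits = [{"id": 1, "title": "Morning meditation"}, {"id": 2, "title": "Silent retreat"}]
extras = {1: {"url": "https://example.com/events/1", "image": "https://example.com/img/1.png"}}
enriched_hits = enrich_events(search_hits, extras)
```

The merge builds new dictionaries instead of mutating the search hits, so the original search response stays available for logging or retries.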

How does Function Calling work?

Function calling was introduced in June 2023. It lets developers describe locally callable functions to the model and receive structured JSON that names the function to call and its arguments. It turns out that function calling is an excellent way to integrate ChatGPT with REST interfaces, because it increases the reliability of ChatGPT’s output.

When you use function calling with ChatGPT models such as gpt-3.5-turbo-16k-0613 or gpt-4-0613, you initially send a system message, the specification of a function and the user prompt. In the happy path, ChatGPT responds with the function which is to be called.

In a second step you call the function specified by ChatGPT with the extracted parameters and get the result in some text format (such as JSON).

In the final step you send the output of the called function to ChatGPT, together with the initial prompt, and ChatGPT produces the final answer in natural language.

So there are 3 steps:

Query ChatGPT to get the function call and its parameters. You send the user prompt and the function specifications in a JSON based format.

Call the actual Python function with the parameters that ChatGPT extracted from the user prompt.

Query ChatGPT with the function output and the initial user prompt to get the final natural-language output.
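The three steps can be sketched in a few lines of Python. To keep the sketch runnable offline, the model is passed in as a plain callable (`ask_model`) and replaced by a stub (`fake_model`). Both names, the simplified `event_search` body and the `AVAILABLE_FUNCTIONS` registry are assumptions for illustration, not the project's code.

```python
import json
from typing import Callable, Dict, List


def event_search(search: str, country: str = "") -> str:
    """Hypothetical stand-in for the function that calls the REST interface."""
    return json.dumps([{"title": f"{search} retreat", "country": country}])


# Functions the model is allowed to request, keyed by name.
AVAILABLE_FUNCTIONS: Dict[str, Callable[..., str]] = {"event_search": event_search}


def answer_question(question: str, ask_model: Callable[[List[dict]], dict]) -> str:
    messages = [{"role": "user", "content": question}]

    # Step 1: the model replies with a function name and JSON-encoded arguments.
    call = ask_model(messages)["function_call"]
    arguments = json.loads(call["arguments"])

    # Step 2: run the requested function locally.
    result = AVAILABLE_FUNCTIONS[call["name"]](**arguments)

    # Step 3: send the function output back to get the natural-language answer.
    messages.append({"role": "function", "name": call["name"], "content": result})
    return ask_model(messages)["content"]


def fake_model(messages: List[dict]) -> dict:
    """Offline stub that mimics the two kinds of replies of the chat API."""
    if messages[-1]["role"] == "function":
        events = json.loads(messages[-1]["content"])
        return {"role": "assistant", "content": f"I found {len(events)} event(s)."}
    arguments = {"search": "meditation", "country": "United Kingdom"}
    return {
        "role": "assistant",
        "content": None,
        "function_call": {"name": "event_search", "arguments": json.dumps(arguments)},
    }
```

In a real server, `ask_model` would wrap the call to the chat completion API, which also receives the function specifications in step 1.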

Example

In our case we have a function with this signature:

    from typing import List, Optional

    def event_search(
        search: str,
        locality: Optional[str] = "",
        country: Optional[str] = "",
        tags: List[str] = [],
        repeat: bool = True,
    ) -> str:
        ...

When the user asks for example:

Can you find events about meditation in the United Kingdom?

ChatGPT will extract the corresponding two function parameters in the first call (step 1):

search: “meditation”

country: “United Kingdom”
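For ChatGPT to extract these parameters, the function has to be described in a JSON-schema-like specification that is sent along with the user prompt. Here is a minimal sketch of what such a specification could look like for event_search. The descriptions are our assumptions, and `parse_arguments` is a hypothetical helper, so the specification in the repository may differ.

```python
import json

# Assumed specification; only the parameter names and types come from the signature.
EVENT_SEARCH_SPEC = {
    "name": "event_search",
    "description": "Search for upcoming events by topic, place and tags.",
    "parameters": {
        "type": "object",
        "properties": {
            "search": {"type": "string", "description": "Topic to search for"},
            "locality": {"type": "string", "description": "City or town"},
            "country": {"type": "string", "description": "Country name"},
            "tags": {"type": "array", "items": {"type": "string"}},
            "repeat": {"type": "boolean"},
        },
        "required": ["search"],
    },
}


def parse_arguments(raw_arguments: str) -> dict:
    """Decode the model's JSON arguments and drop keys the function does not know."""
    allowed = EVENT_SEARCH_SPEC["parameters"]["properties"]
    decoded = json.loads(raw_arguments)
    return {key: value for key, value in decoded.items() if key in allowed}
```

The model returns the arguments as a JSON string, so they have to be decoded before the call. Filtering unknown keys is a cheap safeguard in case the model invents a parameter.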

The function is then called with these two parameters (step 2) and in turn calls the REST interface, which returns a JSON-based response. This JSON response is then sent back to ChatGPT together with the original question (step 3).

In step 3 the chat completion API will be called with 3 messages:

System message (background information about the ChatGPT request)

The original user prompt

The function call with the extracted JSON

Note that the last message has the role “function” and carries the JSON in its “content” field. The full code of the function that assembles the parameters for this last call to the ChatGPT completion API is extract_event_search_parameters in https://github.com/onepointconsulting/event_management_agent/blob/main/event_management_agent/service/event_search_service.py
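The three messages of step 3 could be assembled roughly like this. The system message text and the `build_final_messages` helper are assumptions for illustration; the message layout with the "function" role follows the function calling format described above.

```python
import json
from typing import List

# Hypothetical system message; the project's actual wording may differ.
SYSTEM_MESSAGE = "You are a helpful assistant that answers questions about events."


def build_final_messages(question: str, function_name: str, function_result: list) -> List[dict]:
    """Build the message list for the second call to the chat completion API."""
    return [
        {"role": "system", "content": SYSTEM_MESSAGE},
        {"role": "user", "content": question},
        # The function output travels as a JSON string in "content".
        {"role": "function", "name": function_name, "content": json.dumps(function_result)},
    ]
```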

Event Chat Implementation

The Event Chat prototype contains two applications. The first application is the back-end application written in Python and can be found in this repository:

https://github.com/onepointconsulting/event_management_agent

The second application is the front-end application written in TypeScript and React:

https://github.com/onepointconsulting/chatbot-ui

Back-End Code

The back-end code consists of a websocket based server implemented using mainly python-socketio (implements a transport protocol that enables real-time bidirectional event-based communication between clients) and aiohttp (an asynchronous HTTP Client/Server for asyncio and Python).

The main web socket server implementation can be found in this file:

The function which handles the user’s question is this one:

This is the function which triggers the whole workflow on the chat server. It receives the question from the client, triggers the event search and sends the data stream to the client. It also ensures that in case of an error the stream is properly closed.
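The overall shape of such a handler can be sketched without any websocket library. In this sketch the `emit` callable stands in for the socket send function, and the event names ("token", "error", "stream_end") are made up for illustration. The point is the try/finally, which makes sure the client is told that the stream is over even when something fails.

```python
import asyncio
from typing import AsyncIterator, Awaitable, Callable, List, Tuple

Emit = Callable[[str, str], Awaitable[None]]


async def handle_question(
    question: str,
    stream_answer: Callable[[str], AsyncIterator[str]],
    emit: Emit,
) -> None:
    try:
        async for token in stream_answer(question):
            await emit("token", token)
    except Exception as error:
        await emit("error", str(error))
    finally:
        # Always close the stream, whether or not an error occurred.
        await emit("stream_end", "")


async def failing_answer(question: str) -> AsyncIterator[str]:
    """Stub that streams one token and then fails, to exercise the error path."""
    yield "Here "
    raise RuntimeError("search failed")


def run_demo() -> List[Tuple[str, str]]:
    sent: List[Tuple[str, str]] = []

    async def emit(event: str, data: str) -> None:
        sent.append((event, data))

    asyncio.run(handle_question("Any yoga events?", failing_answer, emit))
    return sent
```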

This method calls two other methods:

process_search — this method processes the incoming question up to the point in which the search via the REST API is executed.

aprocess_stream — this method receives the search result and returns an iterable that allows streaming to the client.

These methods are to be found in this file: https://github.com/onepointconsulting/event_management_agent/blob/main/event_management_agent/service/event_search_service.py

You can find the process_search method here: https://github.com/onepointconsulting/event_management_agent/blob/main/event_management_agent/service/event_search_service.py#L115

This method first calls event_search_openai, the main function that performs all calls to the OpenAI function calling API: https://github.com/onepointconsulting/event_management_agent/blob/main/event_management_agent/service/event_search_service.py#L53

The process_search method also calls execute_chat_function, which takes the completion_message produced by OpenAI, looks up the function using Python’s eval method and finally executes the event_search function: https://github.com/onepointconsulting/event_management_agent/blob/main/event_management_agent/service/event_search_service.py#L89
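Since the function name comes from the model, a stricter alternative to eval is to look it up in an explicit registry. The following is a sketch of that alternative, not the code from the repository. `FUNCTION_REGISTRY`, `execute_registered_function` and the simplified `event_search` body are hypothetical.

```python
import json
from typing import Callable, Dict


def event_search(search: str, country: str = "") -> str:
    """Hypothetical stand-in for the real REST-backed search."""
    return json.dumps({"search": search, "country": country})


# Only functions listed here can ever be executed.
FUNCTION_REGISTRY: Dict[str, Callable[..., str]] = {"event_search": event_search}


def execute_registered_function(completion_message: dict) -> str:
    call = completion_message["function_call"]
    name = call["name"]
    if name not in FUNCTION_REGISTRY:
        raise ValueError(f"Model requested an unknown function: {name}")
    # The arguments arrive as a JSON string and become keyword arguments.
    return FUNCTION_REGISTRY[name](**json.loads(call["arguments"]))
```

With a registry, an unexpected name produced by the model fails with a clear error instead of being evaluated as Python code.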

The aprocess_stream method handles the result of the second call to event_search_openai in process_search. It mostly loops through the stream of tokens produced by the OpenAI API: https://github.com/onepointconsulting/event_management_agent/blob/main/event_management_agent/service/event_search_service.py#L176
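Looping through a token stream boils down to an async generator that picks the text out of each streamed chunk. The sketch below assumes chunks shaped like the streaming responses of the chat completion API (a delta inside the first choice) and uses a stub, `fake_chunks`, instead of a real API call. The function names are ours, not the project's.

```python
import asyncio
from typing import AsyncIterator, List


async def extract_tokens(chunks: AsyncIterator[dict]) -> AsyncIterator[str]:
    """Yield only the text fragments from a stream of completion chunks."""
    async for chunk in chunks:
        delta = chunk["choices"][0]["delta"]
        token = delta.get("content")
        if token:  # skip role-only and empty final chunks
            yield token


async def fake_chunks() -> AsyncIterator[dict]:
    """Stub stream: a role chunk, two text chunks and an empty closing chunk."""
    for delta in ({"role": "assistant"}, {"content": "Two "}, {"content": "events"}, {}):
        yield {"choices": [{"delta": delta}]}


async def collect_tokens() -> List[str]:
    return [token async for token in extract_tokens(fake_chunks())]
```

In the chat server, each yielded token would be forwarded to the client over the websocket rather than collected into a list.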

Front-End Code

The main component with the chat interface is:

https://github.com/onepointconsulting/chatbot-ui/blob/main/src/components/MainChat.tsx

Apart from rendering the UI, it uses a React hook, useWebsocket, which contains the websocket event handlers built with the socket.io-client library. The implementation of the useWebsocket hook can be found in this file: https://github.com/onepointconsulting/chatbot-ui/blob/main/src/hooks/useWebsocket.ts

Observations

This project is meant only as a small prototype and a learning experience, and it could be improved in many ways. In particular, the user interface needs a more professional design and lacks features such as chat history.

Takeaways

If you have REST interfaces that allow you to search your data, then you should be able to build chat interfaces with them. ChatGPT’s function calling is a great way to stack a chat layer on top of these search interfaces. You do not need a very powerful model for that: you can operate with an older model like gpt-3.5-turbo-16k-0613 and still provide a good user experience.

Writing your own chat user interface is also an interesting experience and allows you to create much more customized implementations than projects such as Chainlit or Streamlit. Projects like Chainlit offer a ton of functionality and help you get up and running really quickly, but at a certain stage they constrain your design choices if you do a lot of UI customization. Writing your own chat user interface, though, requires some proficiency with TypeScript or JavaScript and a UI framework such as React, Vue or Svelte.

If you want chat streaming, the websocket protocol is a good fit, and its built-in session also lets you keep a chat memory in a rather convenient way.

My last takeaway is that you do not always need LangChain whenever you want to interface with LLMs. LangChain comes in very handy as a layer on top of many LLM APIs, but at the cost of a large dependency tree. If you know that you are only going to use one very specific feature of a specific LLM, then you should perhaps consider not using LangChain.

Gil Fernandes, Onepoint Consulting