In this story, we will focus on how to build an ElasticSearch agent in Python using the infrastructure provided by LangChain. This agent should allow a user to ask questions in natural language about the data in an ElasticSearch cluster.

ElasticSearch is a powerful search engine that supports lexical and vector search. ElasticSearch can be used in the context of a RAG (Retrieval Augmented Generation) but that is not our topic in this story. So we are not using ElasticSearch to retrieve documents to create a context which is injected in a prompt. Instead we are using ElasticSearch in the context of an Agent, i.e. we are building an agent which communicates in natural language with ElasticSearch and performs searches and aggregate queries and interprets these queries.

ElasticSearch Agent in Action

We have uploaded a demo of the ElasticSearch Agent on Youtube:

Here are some screenshots of the UI which we created with Chainlit:

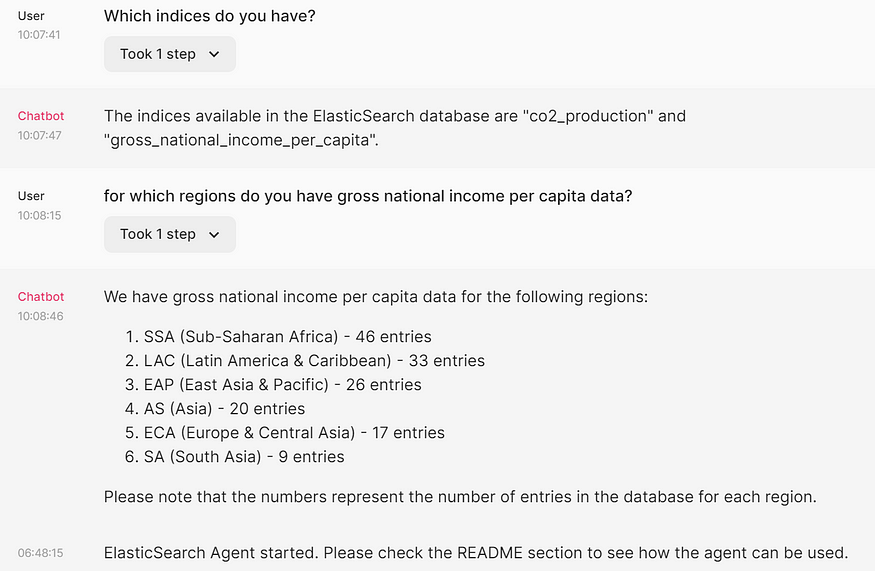

Interaction number 1

Interaction number 2

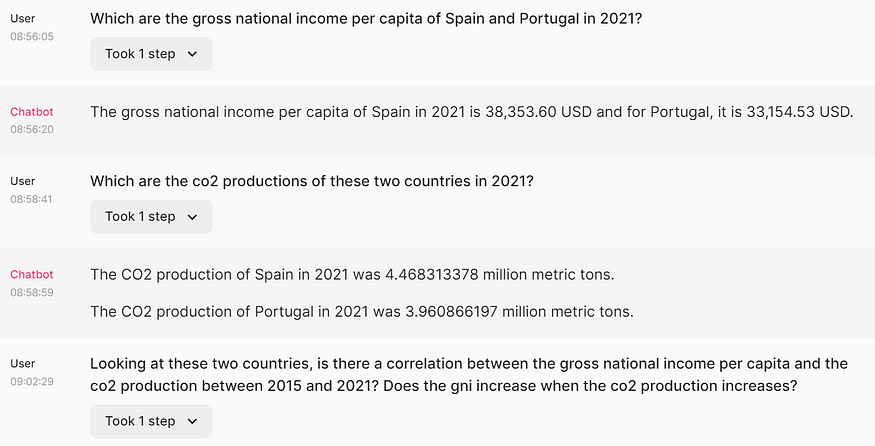

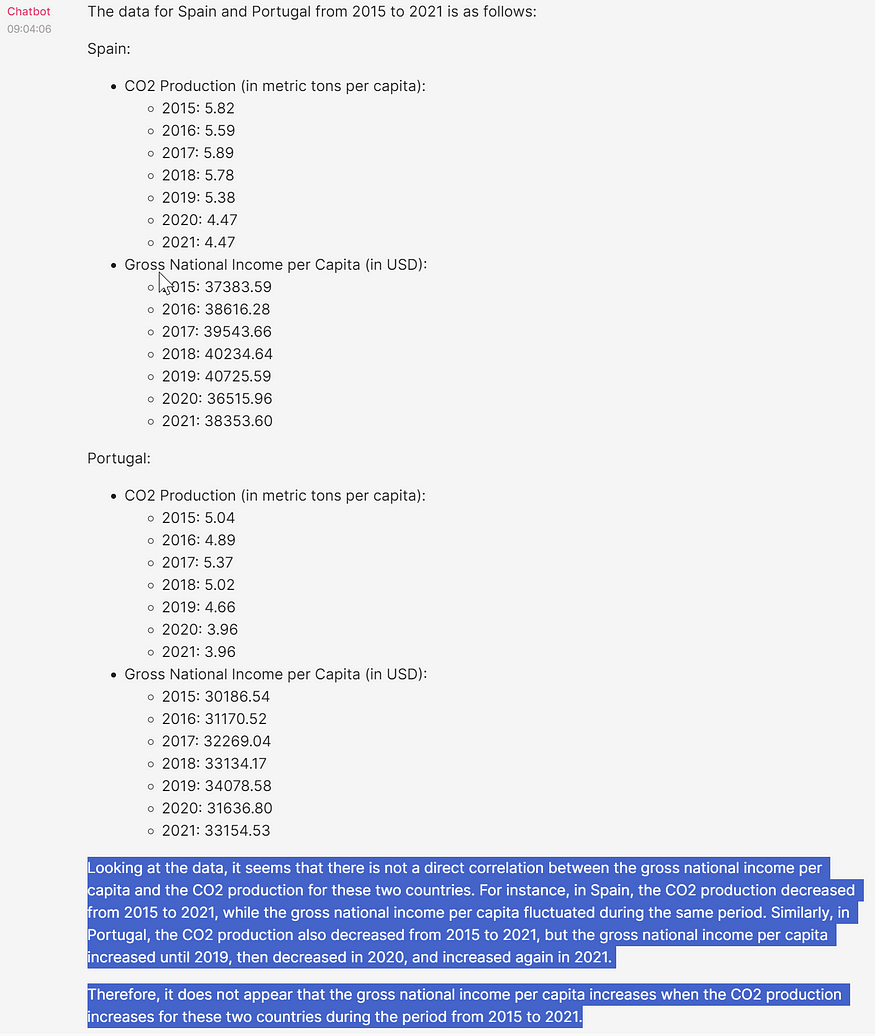

Interaction number 3

Interaction number 4

Recipe for Building the Agent

If we look at the bot from the perspective of how the agent is to be “cooked” we would have the following ingredients:

- LLM (Large Language Model): we have used ChatGPT 4 8K model. We have also tried ChatGPT 3.5 16K model, but the results were not that great.

- 4 self made agent tools:

- elastic list indices: a tool for getting all available ElasticSearch indices

- elastic index show details: a tool for getting information about a single ElasticSearch index

- elastic index show data: a tool for getting a list of entries from an ElasticSearch index, helpful to figure out what data is available.

- elastic search tool: this tool executes a specific query on an ElastiSearch index and returns all hits or aggregation results - Specialised prompting: we used some special instructions to get the agent to work properly. The prompt instructs the agent to get the names of the indices first and then to get the index field names. The main prompt without memory-related instructions is:

f"""

Make sure that you query first the indices in the ElasticSearch database.

Make sure that after querying the indices you query the field names.

Then answer this question:

{question}

"""

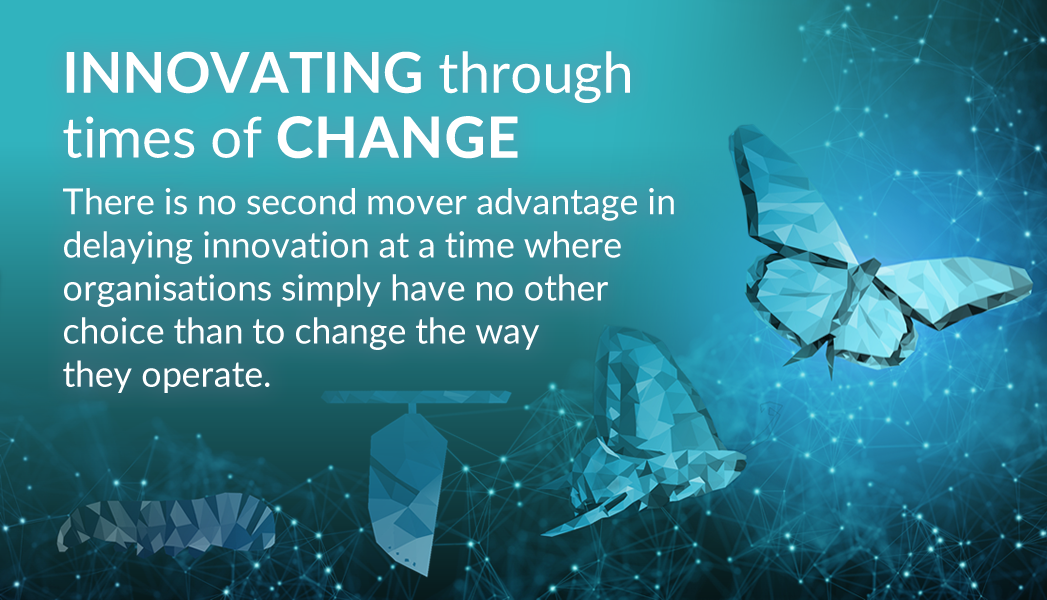

The Agent Workflow

ElasticSearch Agent Workflow

The workflow has two parts:

- Setup — executes three steps:

- initialize tools

- setup LLM model

- setup agent including prompts - Execution flow — these are the workflow steps:

- user asks a question

- LLM analyses the question

- Gateway: decide which tool to use. In some cases there might not be any tool for the task.

- Gateway Case 1: tool found — a tool is executed and the its observation is received. This is a JSON response in our case.

- Gateway Case 2: no tool found — the workflows ends with an error message.

- In the case that a tool was found: the tool’s observation is sent back to the LLM.

- Gateway: decide which tool to use or whether no tool found and the flow terminates or whether we have our final answer. If the decision is to use the tool we will loop through the same steps again.

The execution flow is cyclic until a final answer is found. This means that for one question the agent can access multiple tools or even the same tool multiple times.

Typically the workflow stops after 15 interactions with LLM with an error.

Implementation

We have divided the code in two Github repositories. The first one contains just the agent code and the second one the UI we used to the demo you can see in the video.

Agent Code

Here is the Github repository:

https://github.com/onepointconsulting/elasticsearch-agent

The setup requires you to use Conda and Poetry (dependency manager), but is described on this project’s README file.

The data used in my tests and the video demo can be found in this directory and was obtained from the Global Socio-Economic & Environmental Indicators Kaggle dataset.

We have used the CSV related to the CO2 production and the gross national income.

Most of the actual code is in the following folder:

https://github.com/onepointconsulting/elasticsearch-agent/tree/main/elasticsearch_agent

The code contains three logical units:

- Configuration

- Agent Tools

- Agent factory

The configuration parameters are all listed in the project’s README and are read from an .env file, which you will need to create locally.

The configuration file contains the actual connection object to the ElasticSearch cluster which is used by all tools.

The agent tools are all LangChain based and all of them have the input schema specified using by extension of Pydantic’s BaseModel class. The reason for specifying the input schema is due to the type of the agent which is based on OPENAI_FUNCTIONS.

Here is the list of the tools:

- list indices tool: this tool lists the ElasticSearch indices and is typically called every time the agent answers any question. This tool receives a separator as input and outputs a list of indices separated by it.

- index details tool: this tool lists the aliases, mappings and settings of a specific index. It receives as its input an ElasticSearch index name.

- index data tool: this tool is used for getting a list of entries from an ElasticSearch index, helpful to figure out what data is available. According to my testing this is the least used tool by ChatGPT.

- index search tool: this tool is the search tool and expects as input the index, the query and the start and length of the query. It parses the query and tries to figure out if the query is a search or an aggregation query and depending on that returns either the hits (in case of a search) or the aggregations (in case of an aggregation query). But it also tries to avoid going above a certain threshold in terms of the token size of the response and so might retry the queries. This is the main method of this tool.

In this file you can find the input model (SearchToolInput) and the run method of this tool (elastic_search):

Finally, we have the agent_factory method, which is responsible for initialising the agent executor which you can find in this file in linee 18:

All the tools we mentioned above are meant to use OpenAI functions, hence the agent type is OPENAI_FUNCTIONS . The initialization code injects a specialised system message in the agent.

However, we did not inject any specialised instructions via the agent_factory function. I have tried to use extra_prompt_messages parameter of the OpenAIFunctionsAgent.from_llm_and_tools function, but that did not work out well.

I have just wrapped the question every time with the agent instructions.

f"""

Make sure that you query first the indices in the ElasticSearch database.

Make sure that after querying the indices you query the field names.

Then answer this question:

{question}

"""

Agent UI

https://github.com/onepointconsulting/elasticsearch-agent-chainlit

This project relies on the agent code project. The README file of this project explains how to build this project using Conda and Poetry.

The UI code can be found in this file:

The most interesting part is the message handling the incoming messages:

The prompt used in this function contains up to 5 previous questions of the user. It is a simple attempt to have memory in terms of only the questions. It also contains the instructions to get the indices and details from ElasticSearch on each question.

Takeaways

The agent seems to work reasonably well, however it is not perfect. Sometimes it fails to deliver the expected results in surprising ways.

I could also not get it to work well with ChatGPT 3.5 only with ChatGPT 4. Thus it is quite expensive to run. It also suffers from the 8K window size to ChatGPT 4 (gpt-4–0613), so if an ElasticSearch answer has more than 8K tokens, it is trimmed down to around 6K tokens and so there is data loss.

It is also somehow inefficient. Every time the agent is run, it repeats the initial instructions:

Make sure that you query first the indices in the ElasticSearch database.

Make sure that after querying the indices you query the field names.

We could cache these instructions and embed them afterwards to save some time and money.

The LangChain implementation of the SQL Agent — which inspired me — also queries the tables and fields again and again. This costs money and slows down the whole agent.

LangChain is a very complete framework, but sometimes I could not use its features properly and chose some shortcuts. For example: I have tried ConversationBufferMemory . But it did not work out well — the agent got confused quite a good number of times — , so I decided to manage the memory by myself using a couple of lines of code. It is a bit of a hack, but easy to understand and control.