In this story we are going to explore how you can create a simple web-based chat application that communicates with a private REST API and uses OpenAI functions and conversational memory.

We will again be using the LangChain framework, which provides great infrastructure for interacting with Large Language Models (LLMs).

The agent described in this post uses these tools:

Wikipedia with LangChain’s WikipediaAPIWrapper

DuckDuckGo Search with LangChain’s DuckDuckGoSearchAPIWrapper

Pubmed with PubMedAPIWrapper

LLM Math Chain with LLMMathChain

Events API with a custom implementation which we describe later.

The agent will have two user interfaces:

A Streamlit-based web client

A command line interface

OpenAI functions

One of the main problems when dealing with responses from an LLM like ChatGPT is that they are not completely predictable. Slight variations in the output make parsing by programmatic tools error-prone. LangChain agents send user input to an LLM and expect it to route the output to a specific tool (or function). These agents therefore need output that they can parse reliably.
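The following sketch makes the parsing problem concrete. It shows the kind of regex-based parser that a prompt-based agent depends on. The `Action:` / `Action Input:` format imitates the ReAct-style prompts that LangChain used. The parser itself is a simplified, hypothetical stand-in and not LangChain's actual output parser.

```python
import re
from typing import Optional, Tuple

# Simplified ReAct-style pattern: "Action: <tool>" followed by "Action Input: <input>".
ACTION_RE = re.compile(r"Action:\s*(.+?)\s*\nAction Input:\s*(.+)", re.DOTALL)


def parse_action(llm_output: str) -> Optional[Tuple[str, str]]:
    """Return (tool_name, tool_input), or None if the text does not match the expected format."""
    match = ACTION_RE.search(llm_output)
    if match is None:
        return None
    return match.group(1).strip(), match.group(2).strip()


# Text in the format the prompt asked for parses fine...
print(parse_action("Thought: I need to look this up.\nAction: wikipedia\nAction Input: Albert Einstein"))
# ...but a small change in the model's wording breaks the parser.
print(parse_action("I will use the wikipedia tool with the input Albert Einstein."))
```

The first call prints `('wikipedia', 'Albert Einstein')` and the second prints `None`. Function calling avoids this fragility because the model returns structured JSON instead of free text.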

To address this problem, OpenAI introduced “Function Calling” on June 13th, 2023. With this feature, developers describe functions (in LangChain speak: tools) to the model. For a given input, the model can then respond with a JSON object that states which function to call and with which arguments.

The approach that LangChain agents initially used, for example in the ZERO_SHOT_REACT_DESCRIPTION agent, relies on prompt engineering to route messages to the right tools. This approach is potentially less accurate and slower than using OpenAI functions.

At the time of writing, the models that support this feature are:

gpt-4-0613

gpt-3.5-turbo-0613 (and gpt-3.5-turbo-16k-0613, which we used for our playground chat agent)

Here are some examples given by OpenAI on how function calling works:

Convert queries such as “Email Anya to see if she wants to get coffee next Friday” to a function call like send_email(to: string, body: string), or “What’s the weather like in Boston?” to get_current_weather(location: string, unit: 'celsius' | 'fahrenheit')
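You describe a function to the model by giving its name, a description, and a JSON Schema for its parameters. The sketch below writes the `get_current_weather` example in that format. It then dispatches a `function_call` returned by the model to a local Python function. The local `get_current_weather` implementation is a hypothetical stub. Only the overall shape follows the June 2023 API: the description object, and a call made of a `name` plus a JSON-encoded `arguments` string.

```python
import json

# Function description in the format accepted by the `functions` parameter of the
# June 2023 Chat Completions API: name, description and a JSON Schema for the parameters.
WEATHER_FUNCTION = {
    "name": "get_current_weather",
    "description": "Get the current weather in a given location",
    "parameters": {
        "type": "object",
        "properties": {
            "location": {"type": "string", "description": "The city, e.g. Boston, MA"},
            "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
        },
        "required": ["location"],
    },
}


def get_current_weather(location: str, unit: str = "fahrenheit") -> dict:
    """Hypothetical stub; a real implementation would call a weather service."""
    return {"location": location, "unit": unit, "source": "stub"}


# Registry mapping function names (as the model sees them) to Python callables.
AVAILABLE_FUNCTIONS = {"get_current_weather": get_current_weather}


def dispatch(function_call: dict) -> dict:
    """Run the function the model asked for. `arguments` arrives as a JSON string."""
    func = AVAILABLE_FUNCTIONS[function_call["name"]]
    kwargs = json.loads(function_call["arguments"])
    return func(**kwargs)


# A function_call object of the kind a model might return for "What's the weather like in Boston?"
call = {"name": "get_current_weather", "arguments": '{"location": "Boston, MA", "unit": "celsius"}'}
print(dispatch(call))
```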

Internally the instructions about the function and its parameters are injected into the system message.

The API endpoint is:

POST https://api.openai.com/v1/chat/completions
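Below is a minimal sketch of a raw request to this endpoint using only the standard library. It assumes the June 2023 request format, in which functions are passed in a `functions` list and `function_call` is set to `"auto"`. OpenAI has since added a newer `tools` parameter. The helper only builds the request and never sends it. The API key is read from the `OPENAI_API_KEY` environment variable.

```python
import json
import os
import urllib.request
from typing import Optional

API_URL = "https://api.openai.com/v1/chat/completions"


def build_request(user_message: str, functions: list,
                  model: str = "gpt-3.5-turbo-16k-0613") -> urllib.request.Request:
    """Build (but do not send) a Chat Completions request that offers functions to the model."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "functions": functions,    # function descriptions (name, description, JSON Schema)
        "function_call": "auto",   # let the model decide whether to call a function
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {os.environ.get('OPENAI_API_KEY', '')}",
        },
        method="POST",
    )


def extract_function_call(response: dict) -> Optional[dict]:
    """Return the model's function_call (name + JSON arguments string), or None for a plain answer."""
    message = response["choices"][0]["message"]
    return message.get("function_call")


weather = {"name": "get_current_weather", "description": "Get the current weather",
           "parameters": {"type": "object", "properties": {"location": {"type": "string"}},
                          "required": ["location"]}}
req = build_request("What's the weather like in Boston?", [weather])
print(req.get_method(), req.full_url)
# To actually send it: response = json.load(urllib.request.urlopen(req))
```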

You can find the low-level API details here:

OpenAI Platform


The Agent Loop

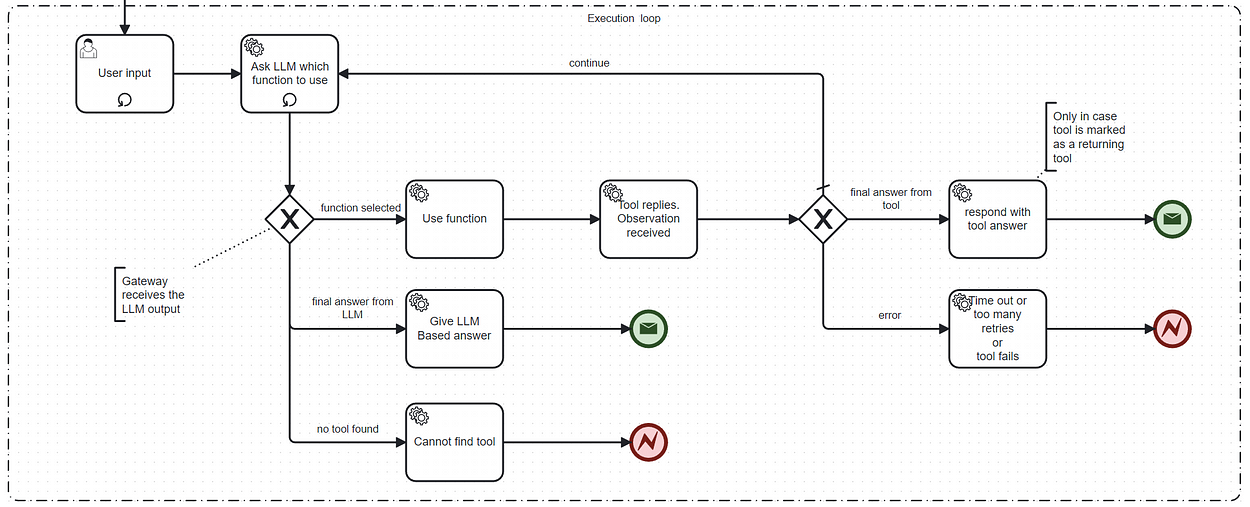

As far as the author can tell, the agent loop is the same as for most agents. The basic agent code is similar to that of the ZERO_SHOT_REACT_DESCRIPTION agent, so the agent loop can still be depicted with this diagram:
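The loop in the diagram fits in a few lines of plain Python. This is a framework-free illustration and not LangChain's own agent executor. `call_model` is a hypothetical stand-in for the Chat Completions call. The scripted fake model at the bottom exists only so that the sketch runs offline. The loop follows the function-calling flow:

- If the model returns a `function_call`, the tool runs and its result goes back to the model as a `function` role message.
- If the model returns plain content, the loop ends.

```python
import json
from typing import Callable, Dict, List


def run_agent(user_input: str,
              call_model: Callable[[List[dict]], dict],
              tools: Dict[str, Callable[..., str]],
              max_steps: int = 5) -> str:
    """Minimal agent loop: ask the model, run any requested tool, feed the result back, repeat."""
    messages = [{"role": "user", "content": user_input}]
    for _ in range(max_steps):
        reply = call_model(messages)           # one assistant message
        messages.append(reply)
        call = reply.get("function_call")
        if call is None:                       # no tool requested: this is the final answer
            return reply["content"]
        result = tools[call["name"]](**json.loads(call["arguments"]))
        # The tool output is returned to the model as a "function" role message.
        messages.append({"role": "function", "name": call["name"], "content": result})
    raise RuntimeError("Agent did not finish within max_steps")


# Offline demo: a scripted fake model that first requests a tool, then answers.
def fake_model(messages: List[dict]) -> dict:
    if messages[-1]["role"] == "user":
        return {"role": "assistant", "content": None,
                "function_call": {"name": "calculator", "arguments": '{"expression": "2 + 3"}'}}
    return {"role": "assistant", "content": f"The answer is {messages[-1]['content']}."}


def calculator(expression: str) -> str:
    a, b = expression.split("+")               # toy tool: only handles "a + b"
    return str(int(a) + int(b))


print(run_agent("What is 2 + 3?", fake_model, {"calculator": calculator}))
```

The demo prints `The answer is 5.`. The `max_steps` guard keeps a model that keeps requesting tools from looping forever.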

The agent loop

Custom and LangChain Tools

A LangChain agent uses tools, which correspond to OpenAI functions. LangChain (v0.0.220) comes out of the box with a plethora of tools that connect to all kinds of paid and free services or interactions, for example:

arxiv (free)

azure_cognitive_services

bing_search

brave_search

ddg_search

file_management

gmail

google_places

google_search

google_serper

graphql

human interaction

jira

json

metaphor_search

office365

openapi

openweathermap

playwright

powerbi

pubmed

python

requests

scenexplain

searx_search

shell

sleep

spark_sql

sql_database

steamship_image_generation

vectorstore

wikipedia (free)

wolfram_alpha

youtube

zapier

In this blog we will show how you can create a custom tool that accesses a custom REST API.
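As a framework-free sketch of what a custom tool needs, the class below bundles four things:

- a name
- a description that the model uses to decide when to call the tool
- a JSON Schema for the tool's input
- a `run` method that queries a REST endpoint

The class name, the endpoint URL and the `q` query parameter are all hypothetical. This is not the repository's Events API tool. In LangChain itself you would typically subclass `BaseTool` and implement `_run`. The HTTP fetcher can be swapped out, so the sketch can be tested without a live server.

```python
import json
import urllib.parse
import urllib.request
from typing import Callable, Optional


def http_get_json(url: str) -> object:
    """Default fetcher: GET a URL and decode its JSON body."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return json.load(resp)


class EventsSearchTool:
    """Hypothetical custom tool wrapping a private events REST API."""

    name = "events_search"
    description = "Search upcoming events by keyword. Input should be a search term such as a city or topic."
    # JSON Schema that an OpenAI function description would use for this tool's input.
    parameters = {
        "type": "object",
        "properties": {"query": {"type": "string", "description": "Search term"}},
        "required": ["query"],
    }

    def __init__(self, base_url: str, fetch: Optional[Callable[[str], object]] = None):
        self.base_url = base_url.rstrip("/")
        self.fetch = fetch or http_get_json

    def to_openai_function(self) -> dict:
        """Describe the tool in the shape expected by the `functions` request parameter."""
        return {"name": self.name, "description": self.description, "parameters": self.parameters}

    def run(self, query: str) -> str:
        url = f"{self.base_url}/events?{urllib.parse.urlencode({'q': query})}"
        # Return a string, because the result is passed back to the model as message content.
        return json.dumps(self.fetch(url))


# Offline usage with a fake fetcher that echoes the URL it was asked for.
tool = EventsSearchTool("https://events.example.com/api/", fetch=lambda url: {"requested": url})
print(tool.run("data science"))
```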

Conversational Memory

This type of memory comes in handy when you want the agent to remember items from previous inputs. For example, you might ask “Who is Albert Einstein?” and then “Who were his mentors?”. Conversational memory helps the agent remember that “his” refers to “Albert Einstein”.
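At its core, conversational memory means replaying earlier turns with each new request, so that the model can resolve references like “his”. Below is a minimal buffer-memory sketch. The class name is hypothetical. LangChain ships ready-made classes for this, such as `ConversationBufferMemory`, along with windowed and summarizing variants.

```python
from typing import List


class BufferMemory:
    """Hypothetical minimal conversational memory: keeps recent turns and replays them."""

    def __init__(self, max_turns: int = 10):
        self.max_turns = max_turns
        self.turns: List[dict] = []

    def save(self, user_input: str, assistant_output: str) -> None:
        self.turns.append({"role": "user", "content": user_input})
        self.turns.append({"role": "assistant", "content": assistant_output})
        # Keep only the most recent turns to bound the prompt size (2 messages per turn).
        self.turns = self.turns[-2 * self.max_turns:]

    def messages_for(self, new_input: str) -> List[dict]:
        """History plus the new user message, ready to send to the chat model."""
        return self.turns + [{"role": "user", "content": new_input}]


memory = BufferMemory()
memory.save("Who is Albert Einstein?", "Albert Einstein was a theoretical physicist.")
for message in memory.messages_for("Who were his mentors?"):
    print(message["role"], ":", message["content"])
```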

Here is LangChain’s documentation on Memory.

An Agent with Functions, Custom Tool and Memory

Our agent can be found in a Git repository:

GitHub - gilfernandes/chat_functions: Simple playground chat app that interacts with OpenAI's…


In order to get it to run, please install Conda first.

Then create and activate a Conda environment and install the following libraries:

conda create -n langchain_streamlit python

conda activate langchain_streamlit

pip install langchain

pip install prompt_toolkit

pip install wikipedia

pip install arxiv

pip install python-dotenv

pip install streamlit

pip install openai

pip install duckduckgo-search

Then create a .env file with this content:

OPENAI_API_KEY=<key>
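python-dotenv, installed above, provides `load_dotenv()` to read this file into the environment. For illustration only, here is a dependency-free sketch of what that step does for a simple `KEY=value` file. It ignores quoting and the other features that python-dotenv supports. Like `load_dotenv()` with its default settings, it does not overwrite variables that are already set.

```python
import os
from pathlib import Path


def load_env_file(path: str = ".env") -> dict:
    """Minimal .env loader: parses KEY=value lines and sets any missing environment variables."""
    loaded = {}
    env_path = Path(path)
    if not env_path.exists():
        return loaded
    for line in env_path.read_text(encoding="utf-8").splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blank lines, comments and malformed lines
        key, value = line.split("=", 1)
        key, value = key.strip(), value.strip()
        loaded[key] = value
        os.environ.setdefault(key, value)  # never override an already-set variable
    return loaded


load_env_file()
if not os.environ.get("OPENAI_API_KEY"):
    print("OPENAI_API_KEY is not set; add it to .env")
```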

Then you can run the command line version of the agent using:

python ./agent_cli.py
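A command line interface like this is essentially a thin read-eval-print loop around the agent. prompt_toolkit, installed above, is presumably what agent_cli.py uses to read input. The standard-library sketch below shows the same shape. Its `agent` argument is hypothetical: any callable that maps user text to a reply. The `read` and `write` functions can be replaced, so the loop can be tested without a terminal.

```python
from typing import Callable


def run_cli(agent: Callable[[str], str],
            read: Callable[[str], str] = input,
            write: Callable[[str], None] = print) -> None:
    """Prompt for questions until the user types 'exit'/'quit' or sends EOF."""
    while True:
        try:
            question = read("> ").strip()
        except EOFError:
            break
        if question.lower() in {"exit", "quit"}:
            break
        if question:                  # ignore empty lines
            write(agent(question))
```

Calling `run_cli(lambda q: f"You asked: {q}")` starts an echo loop. To use it for real, pass your agent's run function instead of the lambda.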

The Streamlit version can be started on port 8080 with this command:

streamlit run ./agent_streamlit.py --server.port 8080

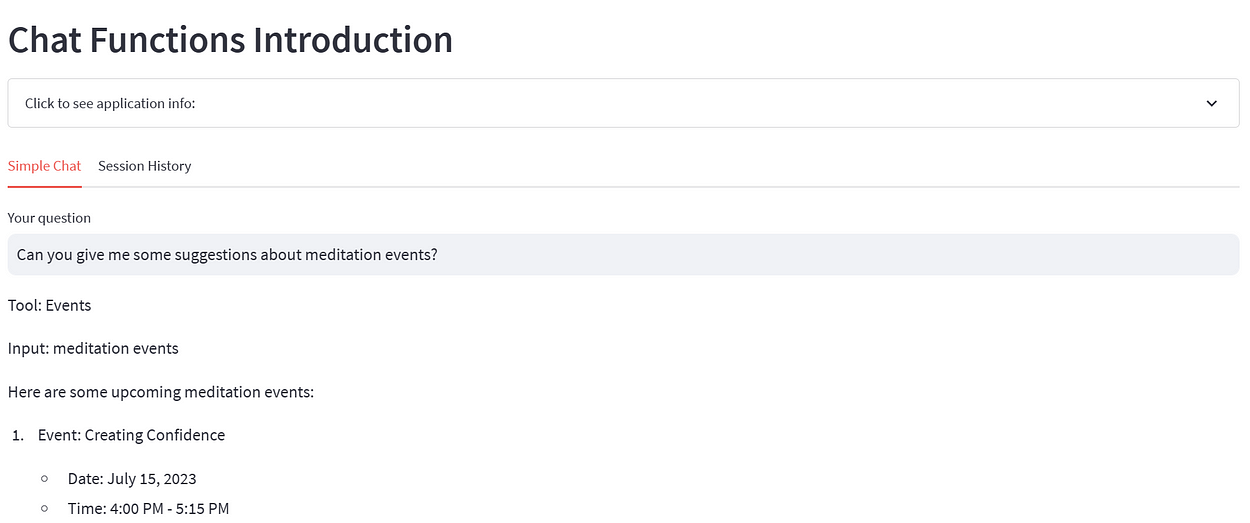

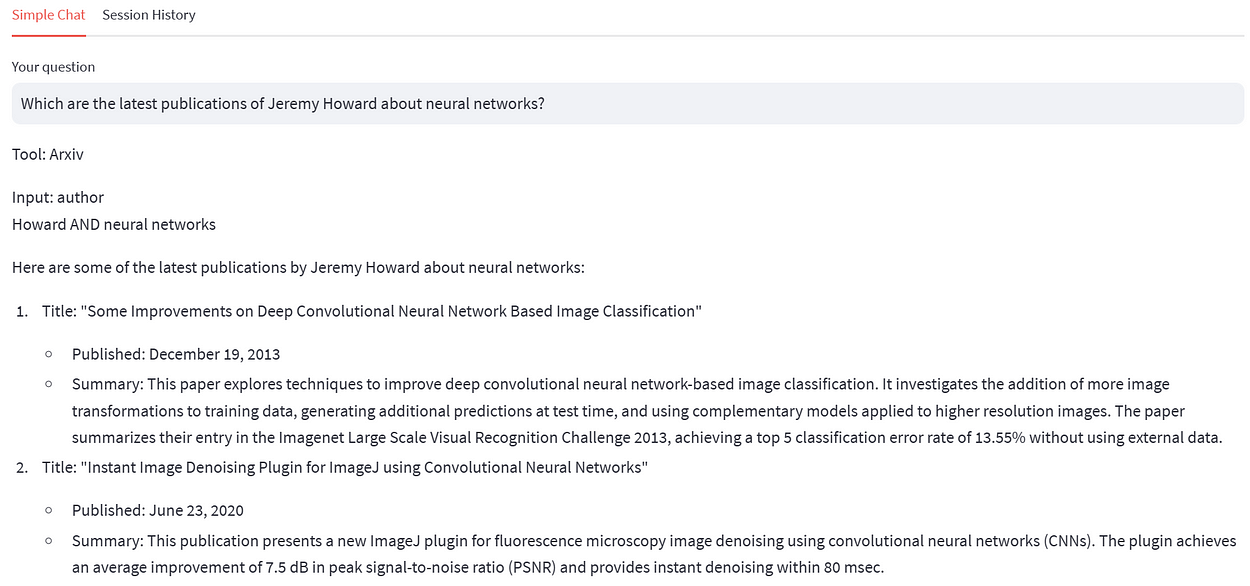

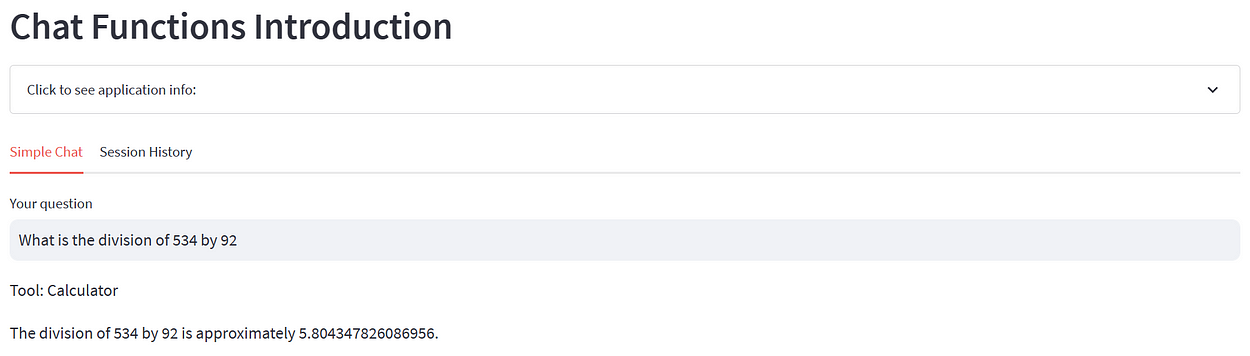

Here are some screenshots of the tool:

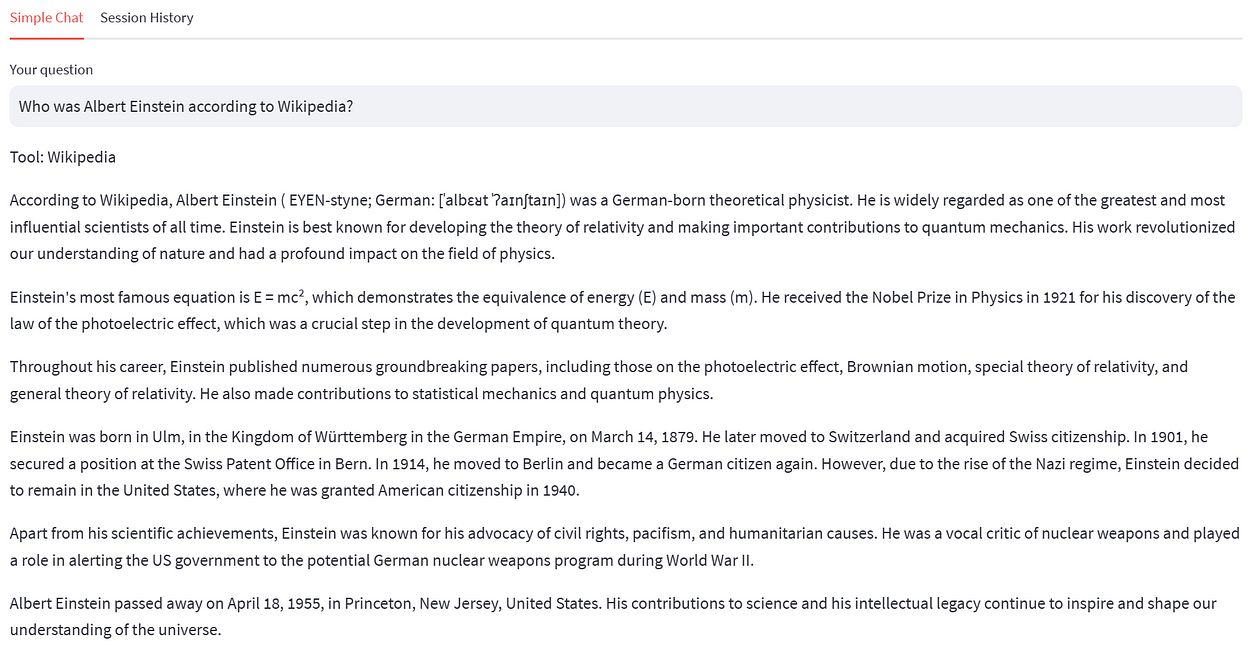

Using a custom agent

The UI shows which tool (OpenAI function) is being used and which input was sent to it.

Using the Arxiv tool

Using the calculator

Using Wikipedia

Code Details

https://github.com/gilfernandes/chat_functions

Summary

OpenAI functions can now easily be used with LangChain. They seem to be a much better (faster and more accurate) way of creating agents than prompt-based approaches.

OpenAI functions also integrate easily with memory and custom tools. There is no excuse not to use OpenAI functions for the type of agent described in this blog.